In *The Complex World*, David Krakauer's introduction to the foundations of complexity science, a striking passage in the chapter on Information, Computation, and Cognition declares that “information and information processing lie at the heart of the sciences of complexity.” This statement captures the essence of complexity science. It also invites us to explore how foundational ideas from information theory and the history of philosophy have reshaped our understanding of the intricate systems that govern nature, technology, and society.

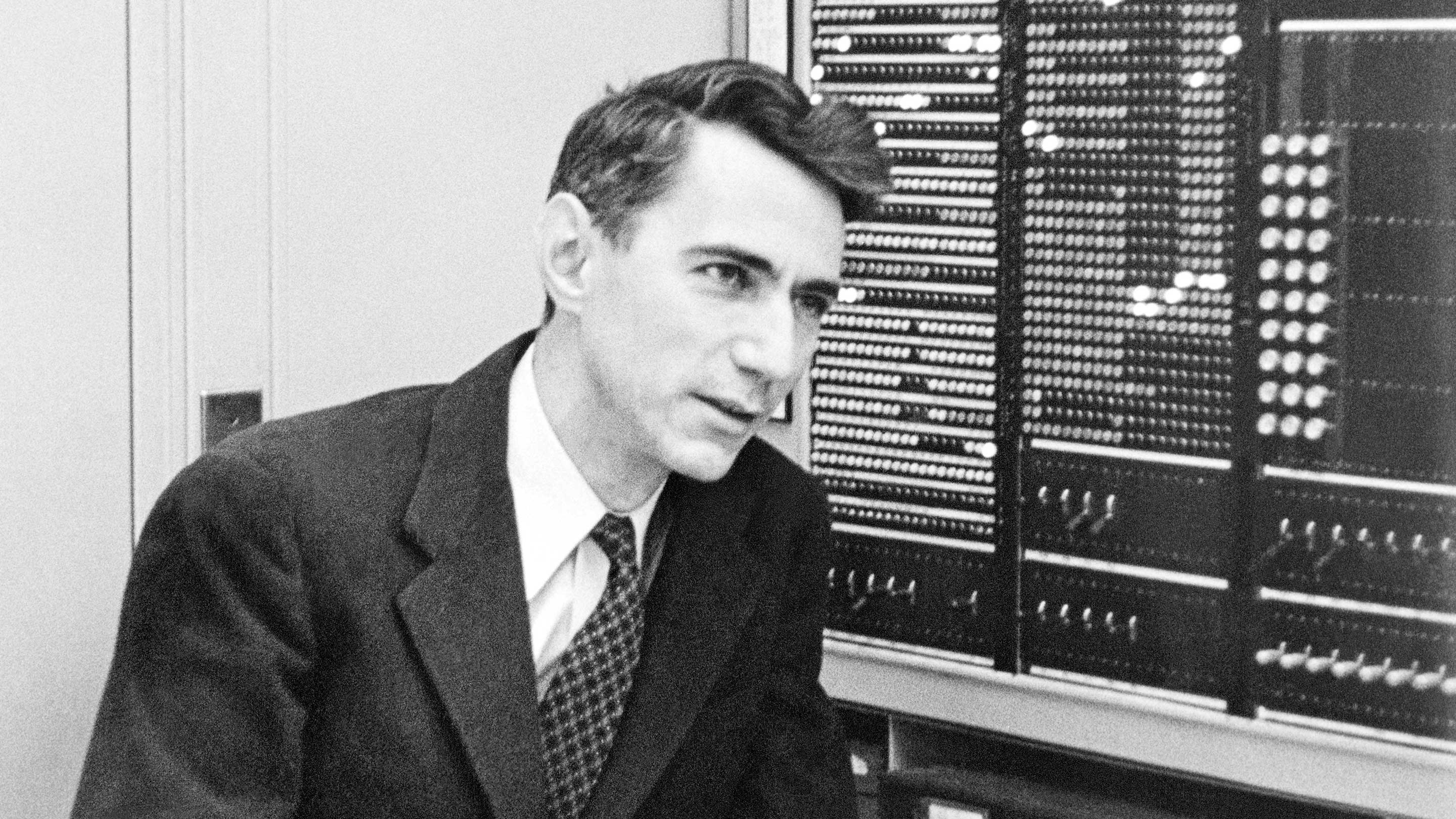

At the forefront of this intellectual revolution stands Claude Shannon, whose 1948 paper “A Mathematical Theory of Communication” laid the groundwork for modern information theory. Shannon showed how to quantify information through measures such as entropy and redundancy, providing a rigorous mathematical framework for analysing how messages are encoded, transmitted, and decoded. His insights transformed the study of communication and opened the way to examining complex systems through the lens of information exchange.
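To make entropy and redundancy concrete, here is a minimal Python sketch. It assumes the simplest possible model of a source: symbols are treated as independent, and their probabilities are estimated from their frequencies in a single message, so it ignores the correlations between successive symbols that Shannon also analysed. Entropy is H = -Σ p log₂ p bits per symbol, and redundancy is 1 - H / H_max, where H_max = log₂ N is the entropy of N equally likely symbols. The function names are illustrative, not taken from any library.

```python
import math
from collections import Counter
from typing import Optional


def shannon_entropy(message: str) -> float:
    """Entropy in bits per symbol, assuming independent symbols whose
    probabilities are estimated from their frequencies in `message`."""
    n = len(message)
    counts = Counter(message)
    # H = sum of p * log2(1/p), which equals -sum of p * log2(p)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


def redundancy(message: str, alphabet_size: Optional[int] = None) -> float:
    """Redundancy 1 - H / H_max, where H_max = log2(alphabet_size).
    By default the alphabet is taken to be the symbols observed in `message`."""
    size = alphabet_size if alphabet_size is not None else len(set(message))
    if size < 2:
        # Assumed convention: a one-symbol source carries no information,
        # so we treat it as fully redundant.
        return 1.0
    return 1.0 - shannon_entropy(message) / math.log2(size)


if __name__ == "__main__":
    for msg in ["abab", "aaab", "aaaa", "abcd"]:
        print(f"{msg!r}: H = {shannon_entropy(msg):.3f} bits/symbol, "
              f"redundancy = {redundancy(msg):.3f}")
```

For "abab" the two symbols are equally likely, so H is 1 bit per symbol and redundancy is 0. Skewing the frequencies to "aaab" lowers H to about 0.811 bits and raises redundancy to about 0.189: the message becomes more predictable, so each symbol carries less information.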

Alongside Shannon, pioneers such as Norbert Wiener, whose *Cybernetics* also appeared in 1948, explored how feedback loops and control mechanisms underpin both living organisms and machines. This work suggested that systems of very different kinds, whether biological, electronic, or social, can be understood as operating through continuous cycles of processing and exchanging information. The realisation led to a shift in perspective: rather than studying components in isolation, researchers began to treat the dynamic interactions and feedback between them as the true drivers of emergent behavior.
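The feedback idea can be illustrated with a toy negative-feedback loop. This is a hypothetical example, not a model taken from Wiener's work: a proportional controller repeatedly measures the error between a target value and the current state (the information fed back) and applies a correction proportional to that error. The names `simulate_feedback`, `setpoint`, and `gain` are illustrative.

```python
def simulate_feedback(setpoint: float, initial: float, gain: float, steps: int) -> list[float]:
    """Toy proportional negative-feedback loop (hypothetical example).

    At each step the controller senses the error between the setpoint and the
    current state, then feeds back a correction proportional to that error.
    Returns the state after every step, including the initial state.
    """
    state = initial
    history = [state]
    for _ in range(steps):
        error = setpoint - state   # information: how far the system is from its goal
        state += gain * error      # control action fed back into the system
        history.append(state)
    return history


if __name__ == "__main__":
    stable = simulate_feedback(setpoint=20.0, initial=10.0, gain=0.5, steps=20)
    unstable = simulate_feedback(setpoint=20.0, initial=10.0, gain=2.5, steps=10)
    print("gain 0.5:", [round(x, 2) for x in stable[:6]])
    print("gain 2.5:", [round(x, 2) for x in unstable[:6]])
```

Because each step multiplies the error by (1 - gain), the loop settles on the setpoint for gains between 0 and 2, and overshoots with growing oscillations above 2. The same sensing-and-correcting structure produces either stability or runaway behavior depending only on how the fed-back information is used.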

Central to complexity science is the idea that complex systems are composed of many interacting parts whose collective behavior gives rise to phenomena that are not apparent from the properties of the individual components. On this view, the complexity of the information a system carries reflects its potential for emergence: the richer and more varied that information becomes, the more possibilities it offers from which spontaneous order and structure can arise. In this sense, the complexity embedded in information mirrors the layered reality it represents.

Treating systems as networks of information processors has also driven the development of powerful computational models. Cellular automata, agent-based simulations, and network analyses let scientists investigate how simple local rules of interaction can culminate in sophisticated global patterns. These models make it possible to quantify the flow of information, and they show that small changes in how information is processed can produce dramatic shifts in system behavior, underscoring the role of information in driving emergent phenomena.
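An elementary cellular automaton is perhaps the smallest example of simple local rules producing global patterns. The sketch below assumes Wolfram's standard numbering of the 256 elementary rules, in which bit k of the rule number gives the new state of a cell whose (left, centre, right) neighbourhood reads as the binary number k. It also assumes a ring of cells (wrap-around edges) started from a single live cell. Rules 30 and 90 differ in only two of their eight lookup-table entries (30 XOR 90 = 68, binary 01000100), yet Rule 30 produces an irregular, random-looking pattern while Rule 90 produces a regular nested (Sierpinski-triangle) pattern.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """Apply an elementary CA rule (Wolfram numbering) once, with wrap-around edges."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        # cells[i - 1] wraps to the last cell when i == 0
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighbourhood as a 3-bit number
        new_cells.append((rule >> index) & 1)        # look up that bit of the rule
    return new_cells


def run(rule: int, width: int = 31, steps: int = 15) -> list[list[int]]:
    """Evolve from a single live cell in the middle; return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


def render(rows: list[list[int]]) -> str:
    """Draw live cells as '#' and dead cells as '.', one generation per line."""
    return "\n".join("".join("#" if c else "." for c in row) for row in rows)


if __name__ == "__main__":
    for rule in (30, 90):
        print(f"Rule {rule}:")
        print(render(run(rule)))
        print()
```

Each cell only ever reads its two neighbours, so any large-scale structure in the printed patterns is emergent: it is not written into the rule table, which is just eight bits.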

This perspective is further enriched by John Holland's account of signals and boundaries, which describes how interactions at the boundaries of systems and their subsystems give rise to organised patterns. Boundaries delimit agents and subsystems, and signals carry information across them, defining the interfaces where local interactions take place. These interactions are critical in establishing the rules by which complex behaviors emerge, showing that even at the micro level, the quality and complexity of information can have far-reaching effects on the overall structure and dynamics of a system.

Ultimately, Shannon's revolutionary insights, the pioneering work in cybernetics, and the evolution of systems theory converge on the conclusion quoted at the outset: information and information processing lie at the heart of the sciences of complexity. This understanding not only provides a unifying framework across disciplines but also highlights how the inherent complexity of information, measured by its entropy and carried by intricate signals, mirrors and shapes the emergent realities of our world.
