When I first designed what later became Padi UMKM, I did not do it in a boardroom. I did it at home, during long months of working from home in the middle of the Covid-19 pandemic. I drew the system on sheets of paper spread across the floor. At that time, my head was full of ideas about ecosystems, complexity theory, and complexity economics. I was not thinking about building another digital platform. I was thinking about how economic coordination itself breaks down under systemic shock, and how new coordination patterns might emerge when old ones collapse. In that sense, Padi UMKM was born less from a product mindset than from an ecosystem mindset, with complexity theory consciously in the background.

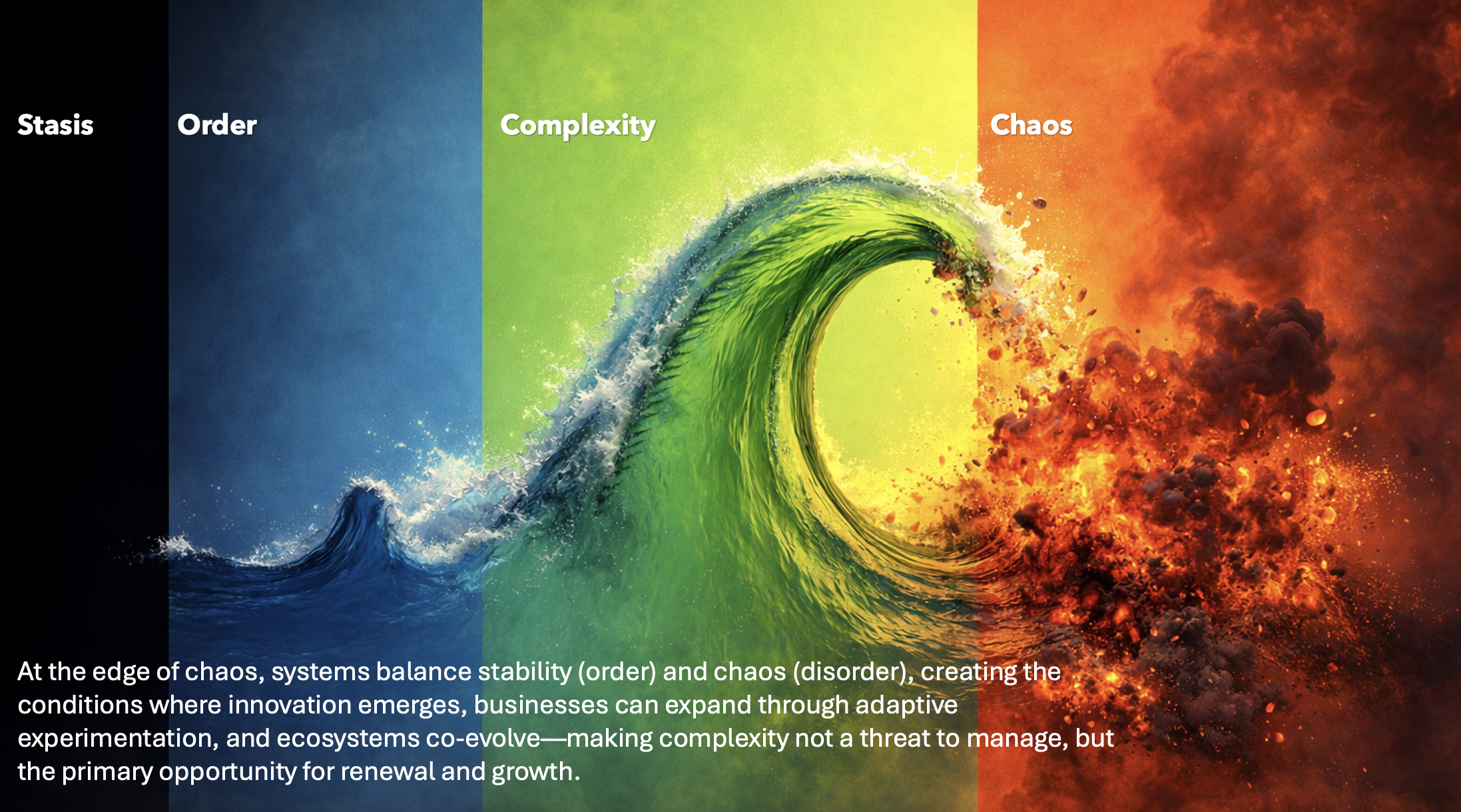

When the pandemic hit, what collapsed was not only the economy. What collapsed was the coordination logic of the economy. Supply chains broke, demand evaporated, SMEs lost access to markets, and institutions discovered that their standard operating procedures were designed for stability, not for systemic disruption. Many organisations reacted by accelerating digital projects, launching platforms, and optimising internal processes. That helped, but it did not address the deeper problem. The economic ecosystem itself had lost its organising structure. Actors that were rational in isolation could no longer produce coherent outcomes collectively. This is how complex systems behave under stress: when established coordination patterns fail, local rationality no longer aggregates into systemic order.

Padi UMKM did not start as a brilliant digital product idea. It started as a response to a coordination failure across a fragmented system of SOEs, SMEs, banks, regulators, ministries, and development agencies. All were acting with good intentions, yet through incompatible logics, timelines, and mandates. The system was not short of initiatives; it was short of coherence. In complexity terms, the economy had been pushed far from equilibrium, and the challenge was not optimisation but reorganisation. What was needed was not another tool, but a new pattern of interaction among heterogeneous agents.

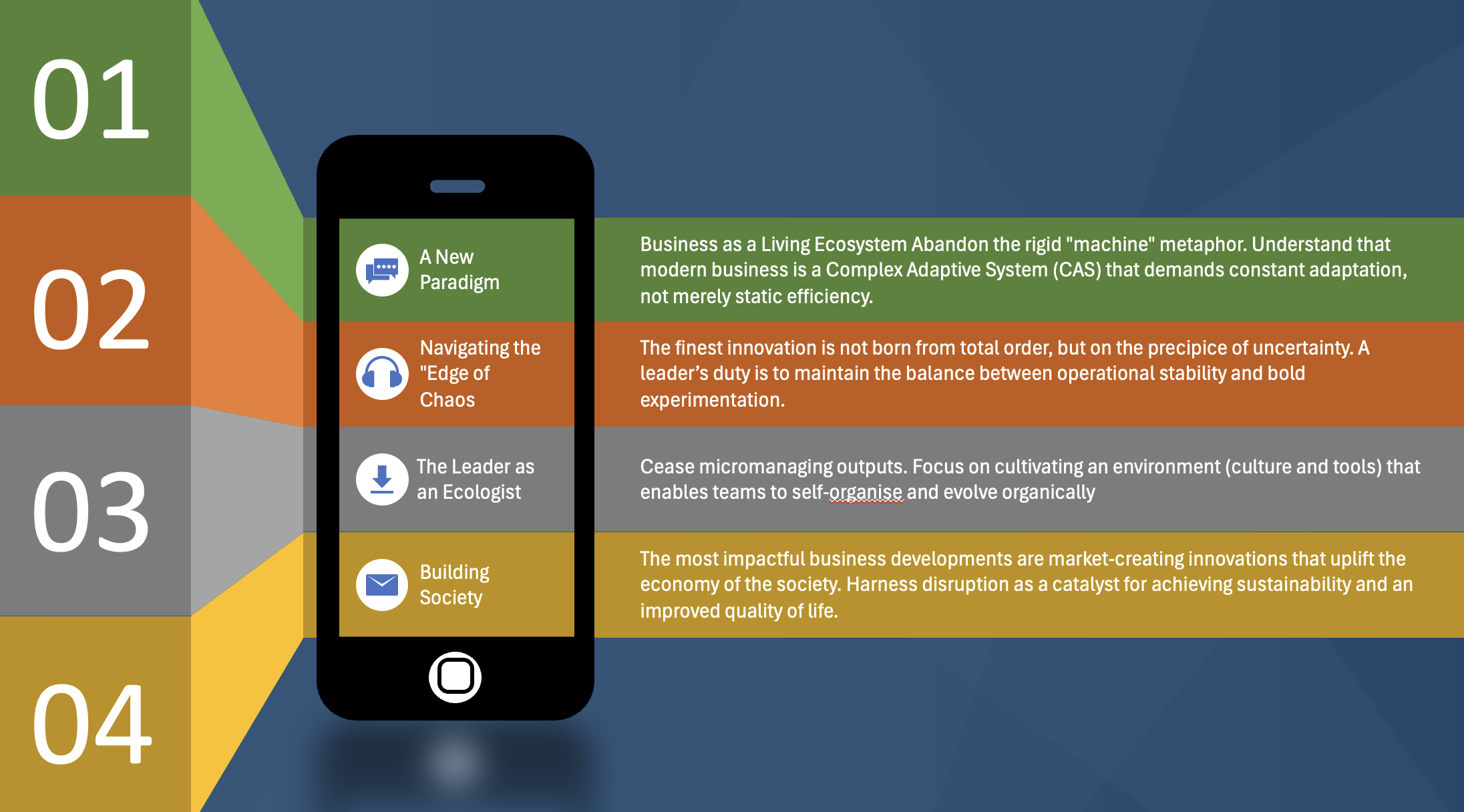

The real innovation of Padi UMKM was therefore not the platform. The platform was the easy part. The digital workforce of Telkom Group can design platforms; that is an operational capability. The platform was necessary, and it became the core infrastructure of the ecosystem, but it was not the breakthrough. The breakthrough was the deliberate redefinition of roles within the economic system. SOEs had to reposition their procurement operations as a capability for creating a new market, namely an SME-based market structure. SMEs were not framed as beneficiaries of aid, but as economic agents that could be structurally integrated into formal procurement and value creation. Banks and financial institutions were not treated merely as lenders, but as part of an enabling architecture that combined financing with capability development and pathways to export. What changed was not a feature set. What changed was the pattern of interaction between economic actors.

The formal launch of Padi UMKM itself was not initiated by Telkom or by the Ministry of SOEs. It was planned within the nationwide BBI (Bangga Buatan Indonesia) programme, because the central government needed a real, executable instrument to accelerate domestic economic circulation under crisis. Telkom committed to developing the platform, even though it was still imperfect at that time. The urgency was national, not corporate. This matters, because it positioned Padi UMKM from the beginning not as a corporate product launch, but as a systemic intervention embedded in a national recovery narrative. The early external promotion of Padi UMKM, beyond the internal SOE environment, was also driven by the BBI programme. Over time, almost by systemic selection rather than by design, Padi UMKM became the de facto e-commerce infrastructure for BBI, as other platforms could not fit the specific institutional and ecosystemic roles required by the programme.

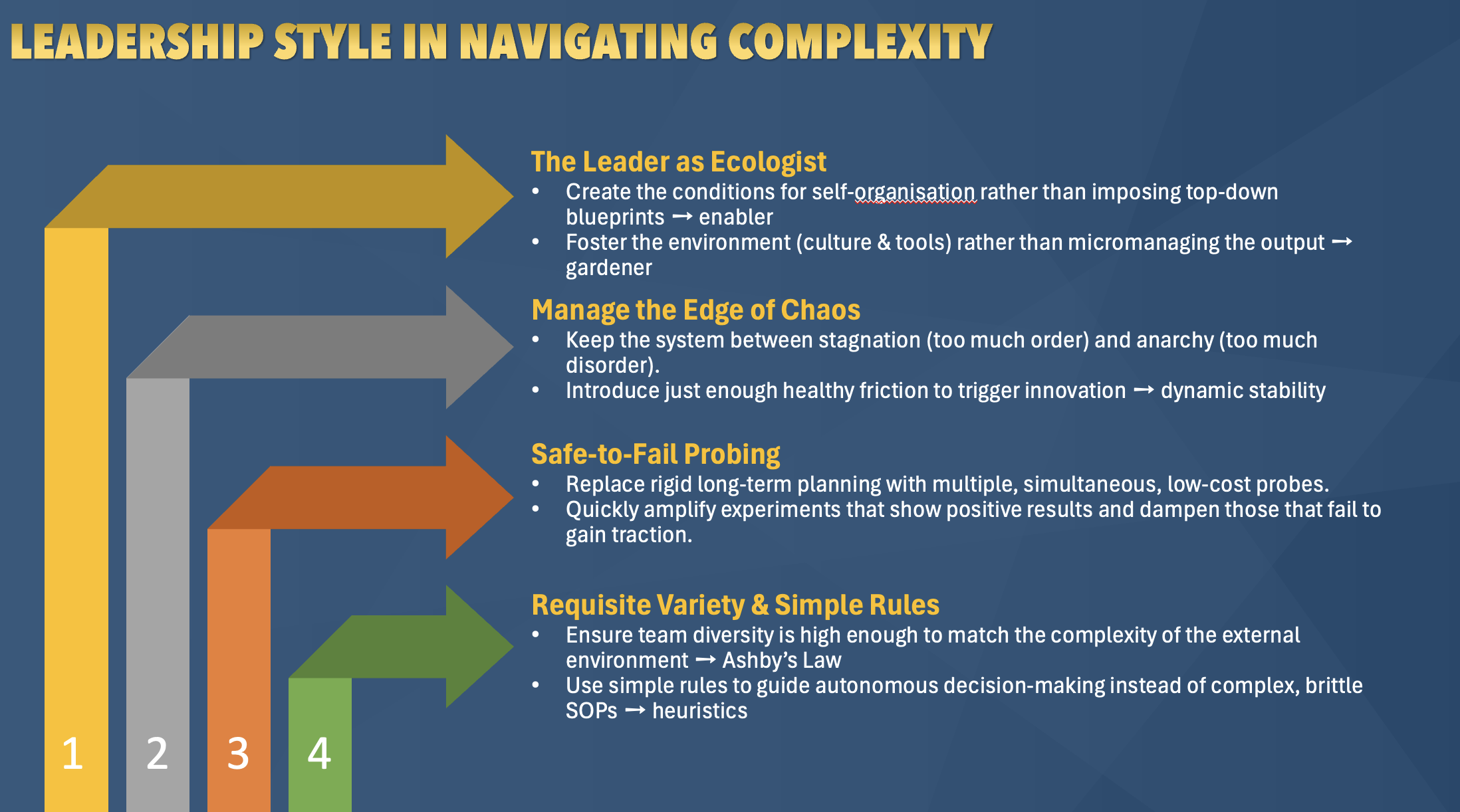

From the beginning, we made a counterintuitive choice in the way the system was governed. Telkom deliberately limited its role to being the product and platform owner. The ecosystem itself was not branded as Telkom’s program. The community was symbolically owned by the Ministry of SOEs and by SOEs collectively. Even the name Padi UMKM did not originate from Telkom. This was not a political compromise; it was a strategic design choice grounded in complexity thinking. In complex systems, ecosystems tend to collapse when one actor over-claims ownership. When the platform owner also claims to own the ecosystem, other actors reduce their commitment, hedge their participation, or quietly resist. By stepping back from symbolic ownership, Telkom created space for other institutions to step forward. The platform provided the infrastructure, but the legitimacy of the ecosystem was deliberately distributed across actors.

At some point, something structurally interesting happened. The initiative crossed a threshold where no single actor could kill it anymore. The CEO of Telkom could not simply shut it down because the ecosystem had become institutionally embedded beyond Telkom. The Minister of SOEs could not dismantle it easily because it had become part of the official narrative of national economic recovery. The President could not disown it because it had been publicly positioned as a success story through BBI, PEN, and related programmes. This was not political theatre. This was the moment when the system acquired path dependence. Once an initiative becomes embedded across multiple layers of institutional narrative and governance, it ceases to be a project and becomes part of the system itself. At that point, you are no longer managing a programme. You are dealing with a living economic structure.

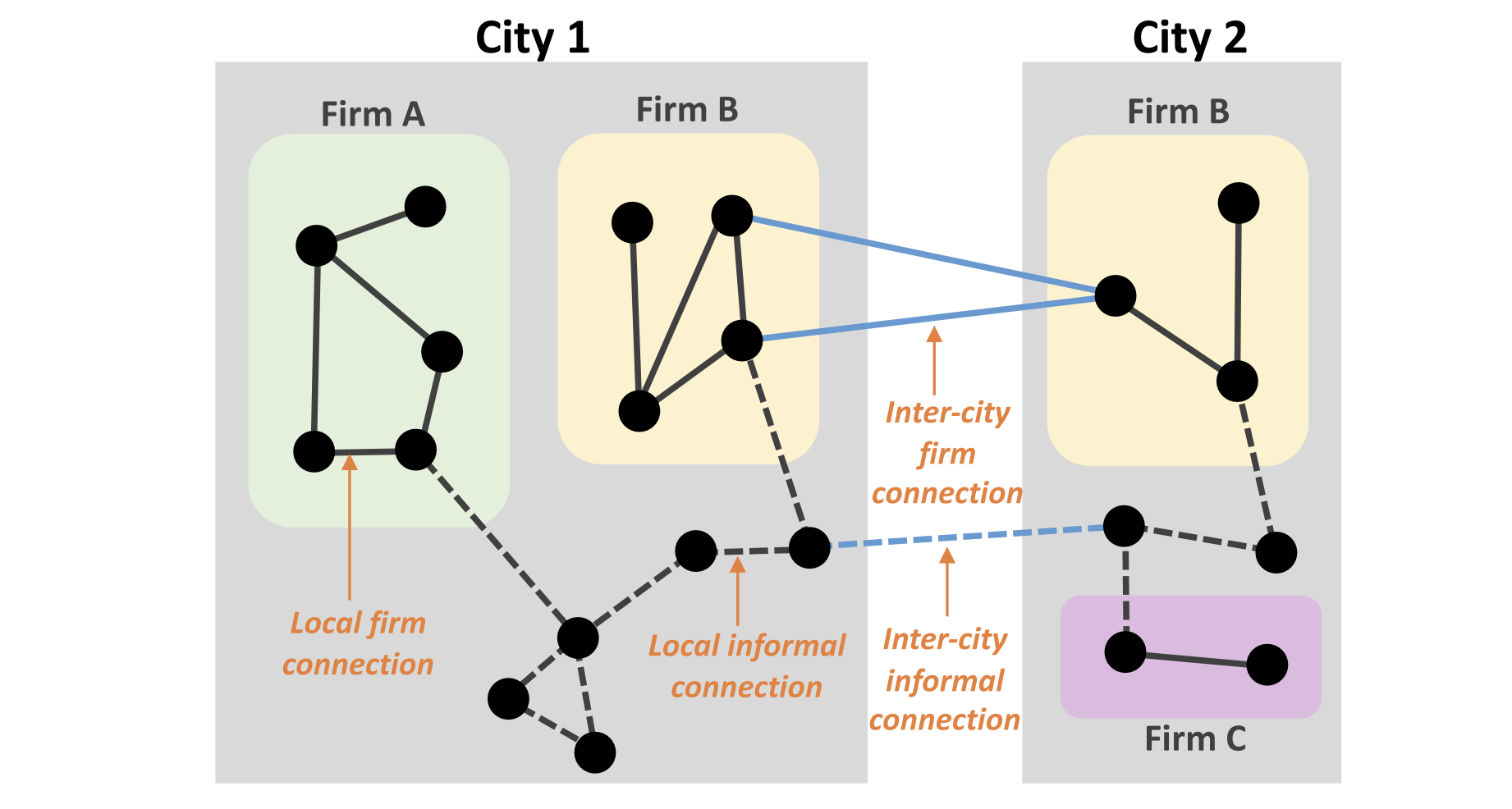

Value in Padi UMKM did not come from transactions alone. It emerged from the coupling of multiple layers of interaction. Transactions between SOEs and SMEs were reinforced by access to credit, by certification mechanisms that enabled formal participation, by development programmes that upgraded SME capabilities, and by pathways to export markets. None of these elements, on its own, would have been transformative. The transformation emerged from their interaction. This is how complex economies create value: not through linear pipelines, but through ecosystems in which different forms of capital (financial, institutional, social, and operational) reinforce one another over time.

Within Telkom, there was a structural separation of roles that proved critical. The Digital Business Directorate (DDB) operated at the product and business level. Its logic was operational: build, run, scale, monetise, and maintain the platform. Even as the platform owner and economic keystone, it remained only one agent within the broader ecosystem. In parallel, the Synergy Subdirectorate under the Strategic Portfolio Directorate worked at the ecosystem level. This role was not about features, roadmaps, or KPIs. It was about sensing emergent patterns of collaboration, mediating conflicts between institutions, and navigating collisions between policy signals and organisational incentives. In the early phase, the Synergy team also played a foundational role in organising cross-SOE agreements, preparing the multi-actor launch, embedding Padi UMKM within the BBI programme, and connecting it with multiple SME development initiatives involving the Ministry of SMEs, the Ministry of Trade, and other institutions. This work was not linear project management; it was ecosystem orchestration under uncertainty.

In Indonesia’s context, the interaction between SOEs, SMEs, banks, and regulators is not merely complex; it is quasi-chaotic. Mandates overlap, incentives conflict, and policies evolve at different speeds and under different political pressures. In such an environment, precise prediction is an illusion. What becomes possible instead is navigation: sensing where constructive patterns of emergence are forming, dampening destructive feedback loops before they escalate, and shaping the boundaries within which the ecosystem evolves. This is not classical management. This is leadership under complexity.

As a result of its early success, there was a moment when the government, again through the BBI programme, asked to expand Padi UMKM to cover all government agencies (K/L/PD). On paper, this looked like success, with an enormous projected GMV. In reality, it carried a systemic risk. Full integration into the broader government procurement apparatus would have imposed rigid compliance structures and administrative constraints that could have frozen the adaptive dynamics that made the ecosystem work. The decision to return that expansion to LKPP, while positioning Telkom only as a platform provider for LKPP, was a deliberate choice to preserve modularity and flexibility over symbolic scale. In complex systems, scale without adaptability is not growth; it is fragility disguised as success.

What this experience ultimately taught us is uncomfortable for traditional management thinking. In complex economic ecosystems, you cannot engineer outcomes. You can only design conditions: boundaries, incentives, roles, and narratives that make constructive emergence more likely than destructive collapse. The platform mattered. The technology mattered. But what mattered more was the humility to accept that once an ecosystem becomes alive, you are no longer the architect standing outside the system. You are one of the agents operating within it.

The strategic lesson for C-level leadership is this. In times of systemic disruption, competitive advantage no longer lies primarily in having the most sophisticated product or the fastest execution. It lies in the capability to shape interaction spaces across institutions, sectors, and policy domains. Leadership shifts from control to stewardship. Strategy shifts from optimisation to navigation. And success is no longer measured only by ownership, but by whether the system you helped catalyse can survive, adapt, and continue to create value even when you step back.

That, ultimately, is what Padi UMKM represents. Not a digital product success story, but a case of how leadership, strategy, and technology can be recomposed to operate effectively in a complex, adaptive economy under crisis. It is an ecosystem in motion. It is Synergy in action.